Let’s take a moment to reflect on everything that is going on with generative AI, because what we are experiencing right now will define generations to come.

In my previous post “ChatGPT: hype or revolution?”, I argued that while there was some short-term hype around ChatGPT, a revolution was clearly brewing. Well, I am here to tell you that the revolution is already in full swing. Today. The launch of GPT-4 marks a big milestone for many reasons. Not only does GPT-4 show better-than-human capabilities at many tasks, but it is also multimodal, enabling mind-boggling applications that we did not think possible only a few months ago. Let’s take a look at some of GPT-4’s amazing out-of-the-box accomplishments:

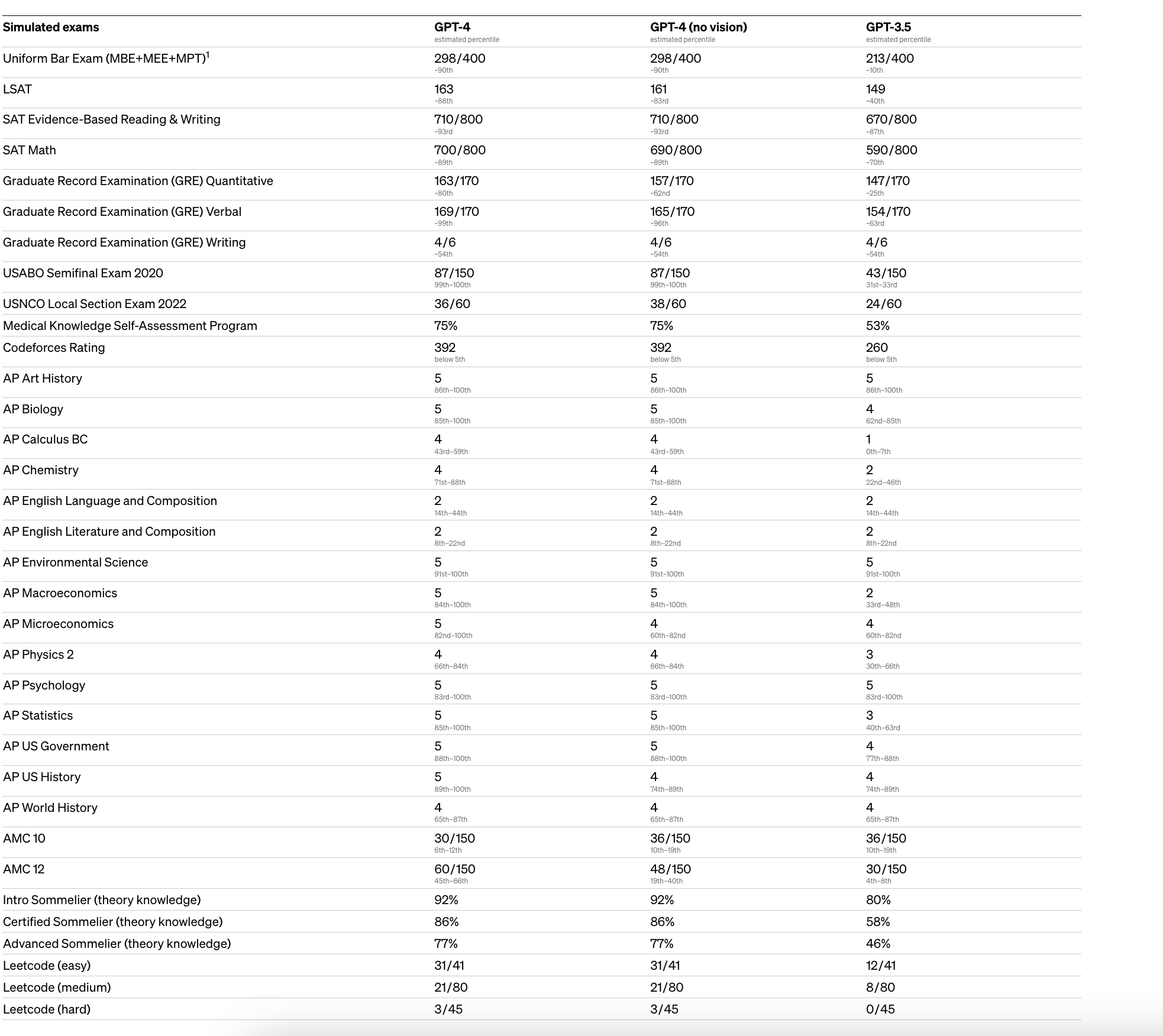

- It scores in the 90th percentile on the bar exam required to become a lawyer

- It scores in the 90th percentile on the SAT in both Math and Reading and Comprehension

- It exceeds the passing score on the USMLE, the US medical licensing exam, by 20 points

- It shows “sparks of artificial general intelligence” according to some

- It is much better at coding than ChatGPT

Results of GPT-4 on several standard exams


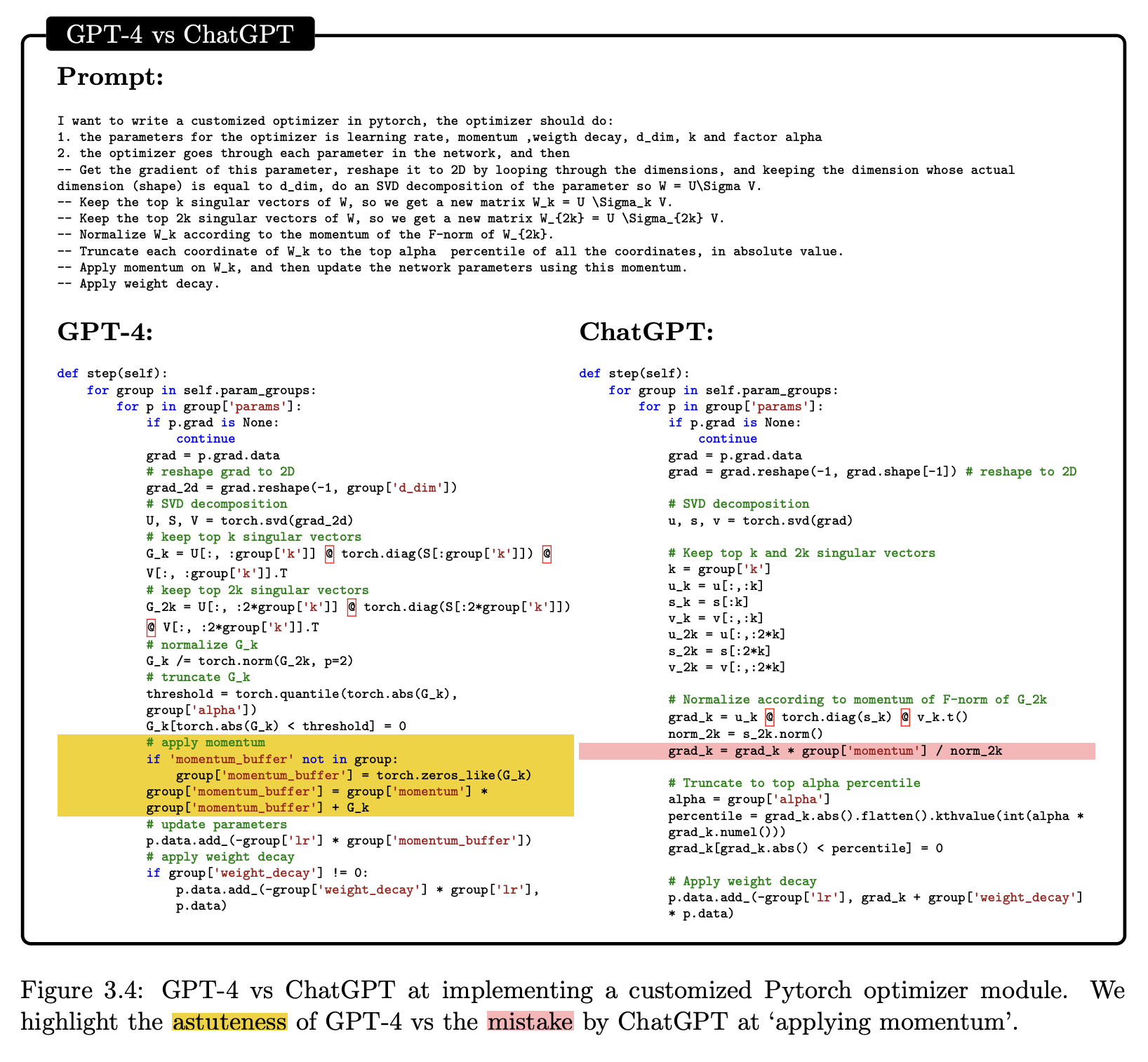

GPT-4 is much better at intricate coding tasks than ChatGPT


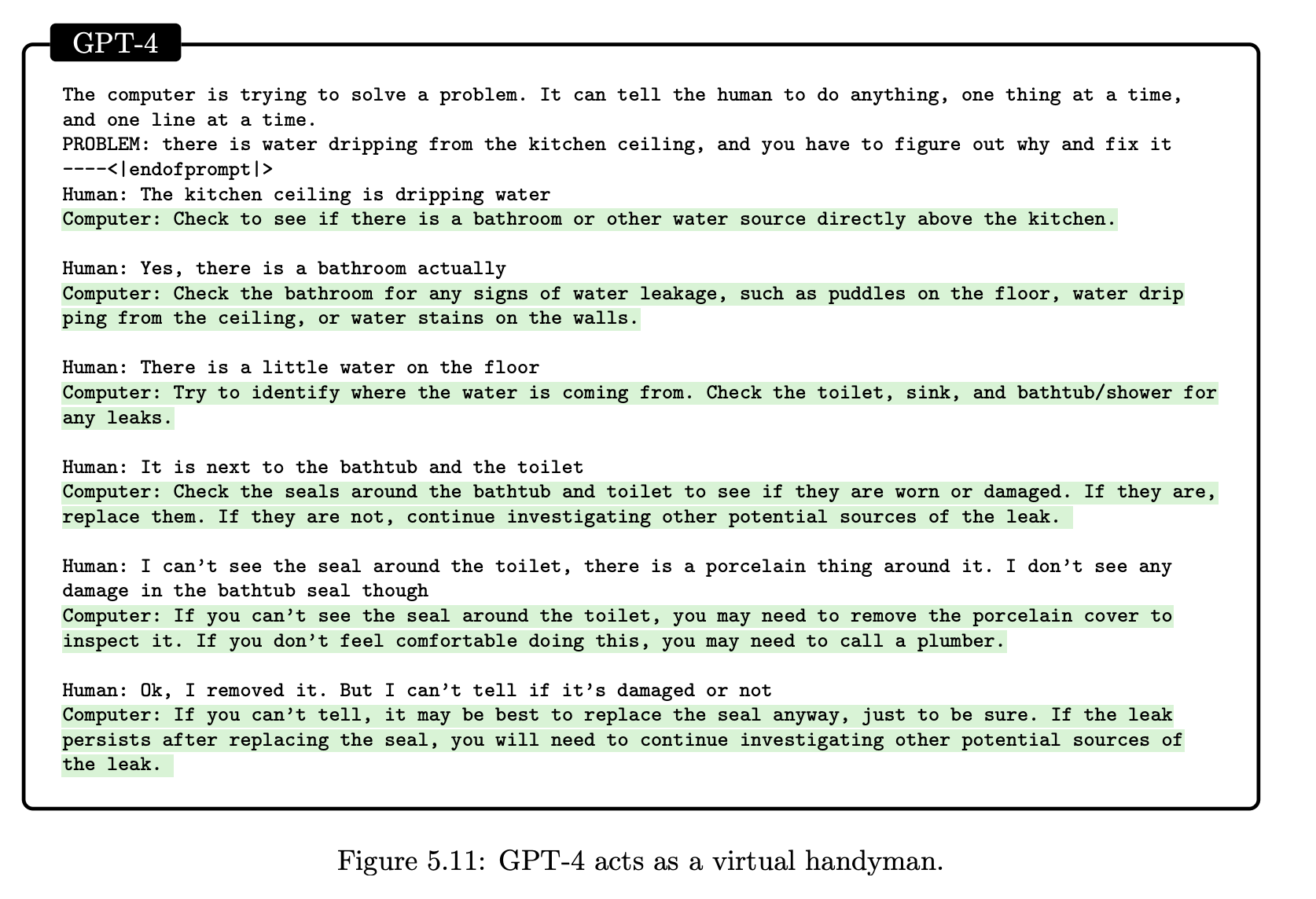

GPT-4 can solve real-world problems


I have been using GPT-4 for months, and I have been very impressed. When I was at Curai, we saw huge improvements in all our medical tasks when moving from GPT-3.5 to GPT-4. I have continued to be impressed while using it at LinkedIn for exploratory work and in some of our recently announced product launches. Yes, it still has many shortcomings, but most of them are being addressed and will get much better soon. As someone who has been working on AI for over twenty years, I can say without hesitation that what has happened in the field in recent months and years represents a huge leap. In the following sections, I dive into some of the questions about this moment in time and what it means for the near future.

What will be disrupted by AI?

Everything. AI will transform almost every vertical and industry in the next few years, from healthcare to education to transportation. Both white- and blue-collar jobs will be transformed. A good way to think about it is to compare it to the Internet plus the mobile phone. Think about how much both of these have changed our world. AI will have as much impact as both of them combined.

This is, of course, both exciting and scary. As with any powerful technology, it will enable good people to do much more good, and bad people to do more evil. The solution is not to stop technological progress, but to anticipate bad consequences and prevent them. With AI, it feels like we are doing that for the first time in history. For years there has been a big focus on responsible AI and adjacent topics such as transparency or bias. That is not to say that all those problems have been avoided or solved, but did you ever hear about “responsible internet” when the internet revolution was happening?

So, I think there are more reasons to be excited than to be scared. But it is important to anticipate and prevent bad outcomes. It is also important to get society ready for the changes that are happening and coming. This includes lots of education and, yes, some regulation.

So, what has happened now?

As many scientists will try to convince you, nothing truly revolutionary has happened on the technical side. The current revolution has happened mostly because several recent (and not so recent) discoveries have come together at once.

The first one is deep learning. Deep learning, which some dismissed at first as “just overfitted large neural networks” when it reappeared in the spotlight ten years ago, went from classifying cats to much more impressive tasks like speech recognition or beating professional Go and poker players.

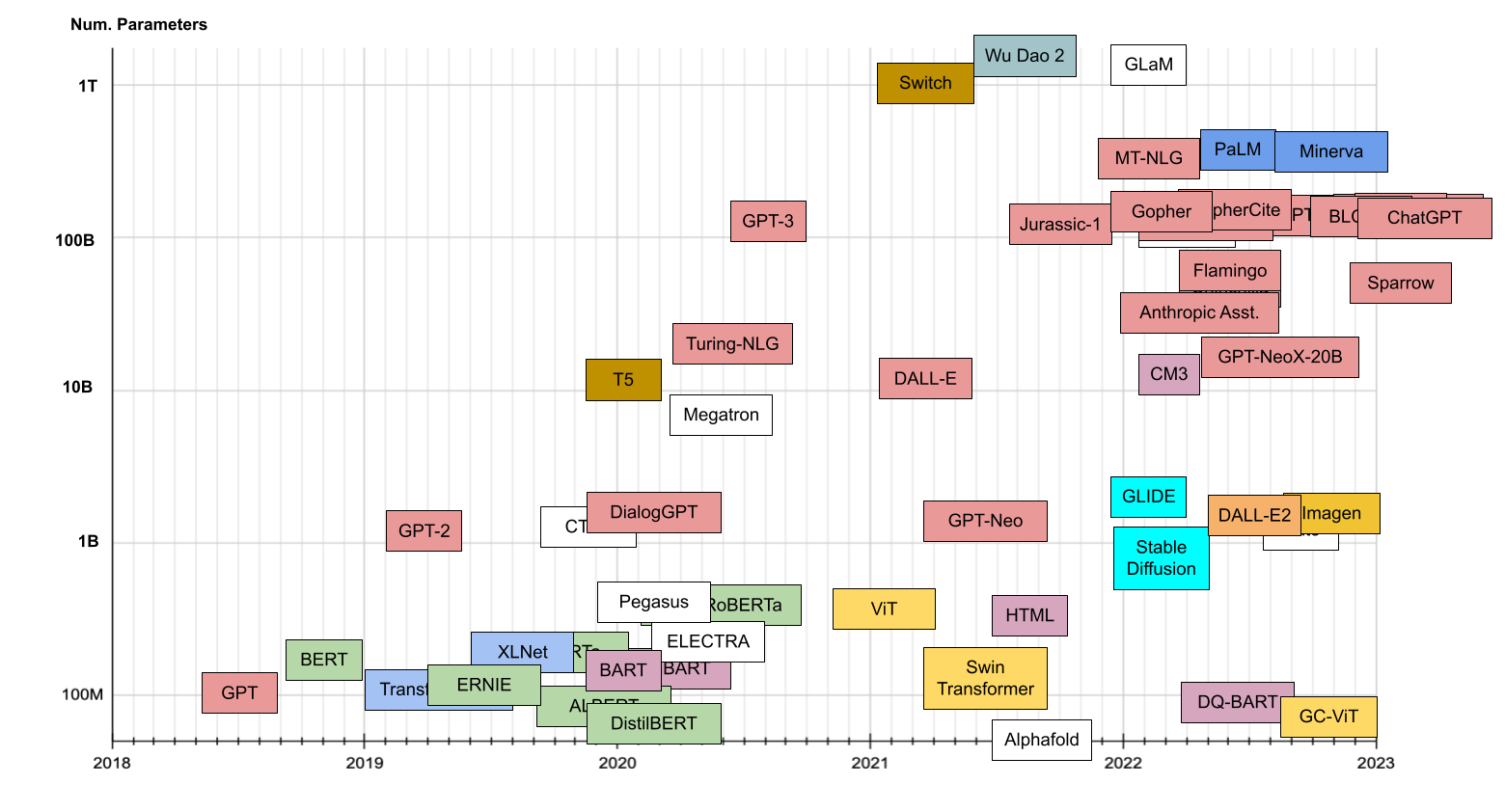

Only five years ago, a novel deep learning architecture, the Transformer, grabbed everyone’s attention (pun intended). Since then, there has been an explosion of Transformer models. You can read all about them in my now-popular Transformer catalog.

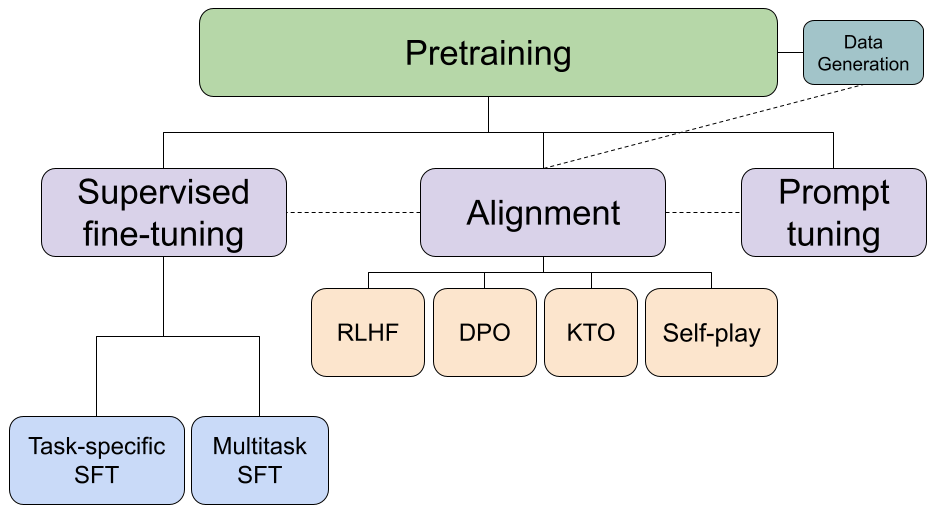

The exponential growth in the number and size of models in only five years has been accompanied by an exponential growth in their capabilities. It is beyond the scope of this post to detail all the innovations that have enabled these models to improve so much in such a short time, but here are the main ones:

- The fundamental attention mechanism introduced in the original Transformer paper, “Attention Is All You Need”

- Improvements in training processes (and in GPU hardware), which have enabled training much larger models on much larger datasets. Most recent models are trained on almost all of the internet… and then some.

- Reinforcement learning from human feedback (RLHF), introduced more recently, which has quickly improved “reasoning” capabilities and alignment.

It is important to remember that these large language models are still only pre-trained to predict the next token (roughly, a word). We still don’t know why, but there are clearly some “emergent abilities” that appear once those models get large enough. The addition of RLHF has introduced yet another level of emergent abilities that are still hard to explain.
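To make “predict the next token” concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus, turns those counts into a next-token probability distribution, and generates text greedily. This is an assumption-laden illustration, not how GPT-4 works: GPT-4 uses a Transformer over subword tokens and billions of parameters, but the training objective, a probability distribution over the next token, is the same in spirit. The function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    # For each token, count how often every other token follows it.
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_distribution(counts, token):
    # Normalize the follower counts into probabilities.
    followers = counts.get(token)
    if not followers:
        return {}
    total = sum(followers.values())
    return {t: c / total for t, c in followers.items()}


def generate_greedy(counts, start, max_tokens=5):
    # Repeatedly append the single most likely next token.
    out = [start]
    for _ in range(max_tokens):
        dist = next_token_distribution(counts, out[-1])
        if not dist:
            break  # No known continuation for this token.
        out.append(max(dist, key=dist.get))
    return out


counts = train_bigram(["the cat sat on the mat", "the cat ate the fish"])
print(next_token_distribution(counts, "the"))
print(generate_greedy(counts, "the"))
```

Nothing in this objective asks the model to reason; the surprise with large models is how much apparent reasoning emerges from scaling it up.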

In summary, there have been a few discoveries that have all come together and enabled this revolution. However, we still don’t fully understand why all those things work so well together.

Is GPT-4 AGI? Is it conscious?

The obvious answer to both questions is, of course, no. The not-so-obvious answer is “who cares?”. In my opinion, AGI (Artificial General Intelligence) is a misnomer. We usually talk of general intelligence when we mean “human intelligence”. Human intelligence is actually not as general as we might like to think, and it is very much constrained by our physical experience, senses, and worldview. One can easily imagine an AI that is far less constrained and therefore more “general”.

On the other hand, while we probably do want AI models to become more general-purpose over time, the reality is that we might be better off with different models that are each “superhuman” at whatever they do. Think about it: if we had one AI that was better than the best human doctors at medical reasoning and another that was better than the best lawyers at legal reasoning, why would we care about having a single AI that is both?

The question about consciousness is philosophically very interesting, and I don’t want to dismiss it. But to answer a question like that, we would first need to agree on what consciousness is. Read any book on the philosophy of mind and you will quickly realize that there is no commonly accepted definition (in fact, the Wikipedia article on consciousness discusses “the problem of definition”). So, the answer to “Is GPT-4 conscious?” is that it is not according to many definitions of consciousness, but it is according to some.

There is, though, a question more interesting than those two: can GPT-4 reason? That is an important question, and its answer is somewhat controversial. Some researchers argue that a model trained the way GPT-4 has been trained can never reason. Interestingly, Yann LeCun and Gary Marcus, who have clashed on other questions about reasoning, both agree that large language models “cannot reason”. I think they are both wrong.

Reasoning is not a black-or-white, binary label that requires a specific fundamental approach. Unlike consciousness, it is not a philosophical concept either: reasoning can be measured, and it is task-specific. Therefore, GPT-4 does reason in many contexts and for many tasks. This is obvious when you read the “Sparks of Artificial General Intelligence” report, which I wish had been given a title more along the lines of “GPT-4 reasoning capabilities”.

Will GPT-4 replace all other AI?

No.

To start, GPT-4 is not the “end” of anything, just the beginning. Not even Transformers are the end of anything. I would be disappointed if, in a couple of years, we had not found a better architecture than Transformers. We can and will have much more powerful and efficient models that reason better. For sure.

On the other hand, as powerful as GPT-4 is, and as many tasks as it can solve, it is not very efficient in many ways. GPT-4 is very complex and requires a lot of compute and energy, not only for training but even for inference. It would be silly to use it to replace, e.g., XGBoost in the many cases where that much simpler and more efficient model gets comparable results. You might also hear about other limitations of models like GPT-4. For example, they have been trained on older data (up to 2021), and it is hard to get proprietary data into them. However, those are not really fundamental limitations, and they are being addressed very quickly. For example, the new chatgpt-retrieval-plugin can be used to easily address both of those shortcomings. Still, the efficiency and ROI of these huge models will remain a real concern over the next few years, during which you should expect models to become more efficient and, almost in parallel, larger and more complex.
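The general idea behind retrieval-based fixes for stale or proprietary data can be sketched in a few lines: find the documents most relevant to a question and paste them into the prompt, so the model answers from fresh context instead of its frozen training data. To be clear, this is not the chatgpt-retrieval-plugin’s API. It is a hypothetical, self-contained sketch that assumes naive bag-of-words cosine similarity where a real system would use learned embeddings and a vector database; `retrieve` and `build_prompt` are illustrative names, and sending the prompt to a model is left out.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    # Naive stand-in for an embedding: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query, documents, k=2):
    # Rank documents by similarity to the query and keep the top k.
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    # Prepend the retrieved (e.g. private or recent) documents as context.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Our Q3 refund policy allows returns within 60 days.",
    "The cafeteria opens at 8am.",
    "Holiday schedule for 2023 offices.",
]
print(build_prompt("What is the refund policy?", docs, k=1))
```

Because the knowledge lives in the document store rather than in the model weights, updating it is as cheap as adding a document, which is why this kind of approach sidesteps the training-data cutoff.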

Conclusion

GPT-4, together with ChatGPT before it, represents an inflection point in artificial intelligence. It is not only that the model has amazing capabilities that would have been unthinkable a few months ago; it is mostly about how these two data points illustrate the mind-boggling increase in our rate of innovation. Even if you still believe GPT-4 is not impressive, project what you have seen in the past few months two years forward. What do you see?

So, yes, as Bill Gates said, the Age of AI has begun.

Btw, if you are able to cope with Lex Fridman, his interview with Sam Altman has some interesting tidbits about GPT-4. Also, take a look at the book that Reid Hoffman wrote using GPT-4, and his conversation about writing it.